Pics or It Didn’t Happen: Deepfakes Explained

A lot changed when Apple unveiled the first-ever iPhone in 2007. It pushed technology forward by orders of magnitude, whether that was through its innovative touchscreen design, its ease of use for people of all tech literacy levels, or its remarkable ability to act as a full-blown pocket-sized computer. But of all the changes that Apple’s invention brought, few were as powerful as its little 2-megapixel camera.

In the nearly 20 years since the iPhone, we’ve carried high-quality cameras on our person at all times. This led to a new era of social media and the digitization of the real world. Suddenly, ‘pics or it didn’t happen’ was a default response to unlikely claims online, with anything captured in a photo or video considered to be irrefutable proof that what we were seeing was real.

From here, we began to establish inherent trust in handheld, amateur-looking video. Anything without the polish of a professional film studio was taken at face value, which propelled sites like Facebook and Instagram through the early to mid-2010s. But as with any agreed-upon expectations, some got to work on subverting them.

One of the earliest examples of this subversion is a ‘cellphone’ video in which a chimpanzee fires a machine gun at the cameraman. The video briefly went viral before being revealed as part of a guerrilla (or in this case, gorilla) marketing campaign for the then-upcoming Planet of the Apes reboot, released in 2011. This fake video required the time, skills, and resources of a VFX house funded by 20th Century Fox. It wasn’t something just anyone could pull off. And while this particular example was ultimately a harmless hoax, it swung the door wide open for new subversions of our trust in authentic-looking content.

Now, there’s a new form of subversive media overtaking social media platforms and video sites: AI-powered deepfakes. In this article, we’ll explain what deepfakes are, how they’re being used to exploit, scam, and mislead people, how to spot them, and how to navigate the web in a post-AI landscape.

Deepfakes Explained

If you’re reading this, we’re assuming you’re at least somewhat up-to-date on the latest advancements in the AI space. ChatGPT reigns supreme for almost any writing-related need you may have, Midjourney lets you conjure digital hallucinations of nearly any image you can dream up, and AI ‘personalities’ are even beginning to run their own social media accounts. What you may not know much about, though, is deepfakes.

A deepfake is a special form of AI-generated content (typically video or audio) that uses a process known as ‘deep’ learning to train an AI to replicate something, most often a person. Like other forms of machine learning, deep learning works from examples supplied by whoever is training the model, which gradually learns to produce new, fully AI-generated content that resembles them. Generally, the more examples you can provide the AI, the more accurate its generated content will be.
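To make the ‘more examples, more accurate’ idea concrete, here is a toy sketch in Python. It is not deep learning and not how any real deepfake tool works: it just averages noisy examples of a made-up ‘voice profile’ (a short list of numbers invented for illustration) and measures how far the learned copy lands from the original. The names learn_profile and profile_error are hypothetical.

```python
import random

def learn_profile(true_profile, n_examples, noise=1.0, seed=0):
    """Toy 'training': average n noisy examples of a made-up profile.

    Assumption for illustration only: each example is the true profile
    plus random noise. Real deepfake models are far more complex.
    """
    rng = random.Random(seed)
    totals = [0.0] * len(true_profile)
    for _ in range(n_examples):
        for i, value in enumerate(true_profile):
            totals[i] += value + rng.gauss(0.0, noise)
    return [total / n_examples for total in totals]

def profile_error(true_profile, learned):
    """Root-mean-square distance between the real and the learned profile."""
    squared = [(t - l) ** 2 for t, l in zip(true_profile, learned)]
    return (sum(squared) / len(squared)) ** 0.5

# A hypothetical 8-number 'profile' standing in for a person's voice or face.
profile = [0.2, -1.3, 0.7, 2.1, -0.4, 1.5, -2.2, 0.9]

few = profile_error(profile, learn_profile(profile, n_examples=5))
many = profile_error(profile, learn_profile(profile, n_examples=5000))
print(f"error with 5 examples:    {few:.3f}")
print(f"error with 5000 examples: {many:.3f}")
```

Running it should show a much smaller error for the copy built from 5,000 examples than for the one built from 5, which is the same intuition behind why public figures with hours of footage online are the easiest people to fake.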

In practical terms, this means you can create an AI video, image, or audio clip of anyone saying anything, provided you have enough learning material to train the AI. Initially, it was unclear what purpose deepfakes had except for the novelty, with use cases like pretending you met Tom Cruise or making a famous hockey captain talk smack about the Florida Panthers. But (un)surprisingly, people fell for these deepfakes hook, line, and sinker, even without any real attempt to deceive. From here, it was only a matter of time before deepfakes went from a quirky, Web 3.0 oddity to a full-blown weapon in the information war.

The Dangerous Depths of Deepfakes

You can no longer trust that anything you see on the internet is real without proof, and it’s all thanks to deepfakes. Unlike that Planet of the Apes chimp video, which probably took a small team of pros at least a couple of weeks to complete, you can create convincing deepfakes alone in your bedroom, with nothing but a few dozen hours of footage and a powerful graphics card.

As this technology becomes more accessible, we’ve already seen the disturbing ways it’s being used to hurt people. In 2024, one story made headlines when it was revealed that a woman had been scammed out of almost $900,000 after being made to believe she was in an online relationship with Hollywood star Brad Pitt. While you can draw your own conclusions about this person’s gullibility, bear in mind that she was receiving voice messages, videos, and even images of Pitt holding a sign with her name on it, all of which were quite convincing to the untrained eye.

We also know that deepfakes played a major role in misinformation campaigns during the last two US elections. Social media has always had a significant impact on elections (and vice versa), but this was taken to new heights, ranging from fake images of Trump being arrested to registered Democrats receiving phone calls from what sounded like Joe Biden himself, telling them not to vote in the upcoming primaries. Deepfake memes were also commonplace throughout the last election, with many people who could tell the difference sharing them in a maliciously ironic way, which led those who weren’t as keen-eyed to take them at face value.

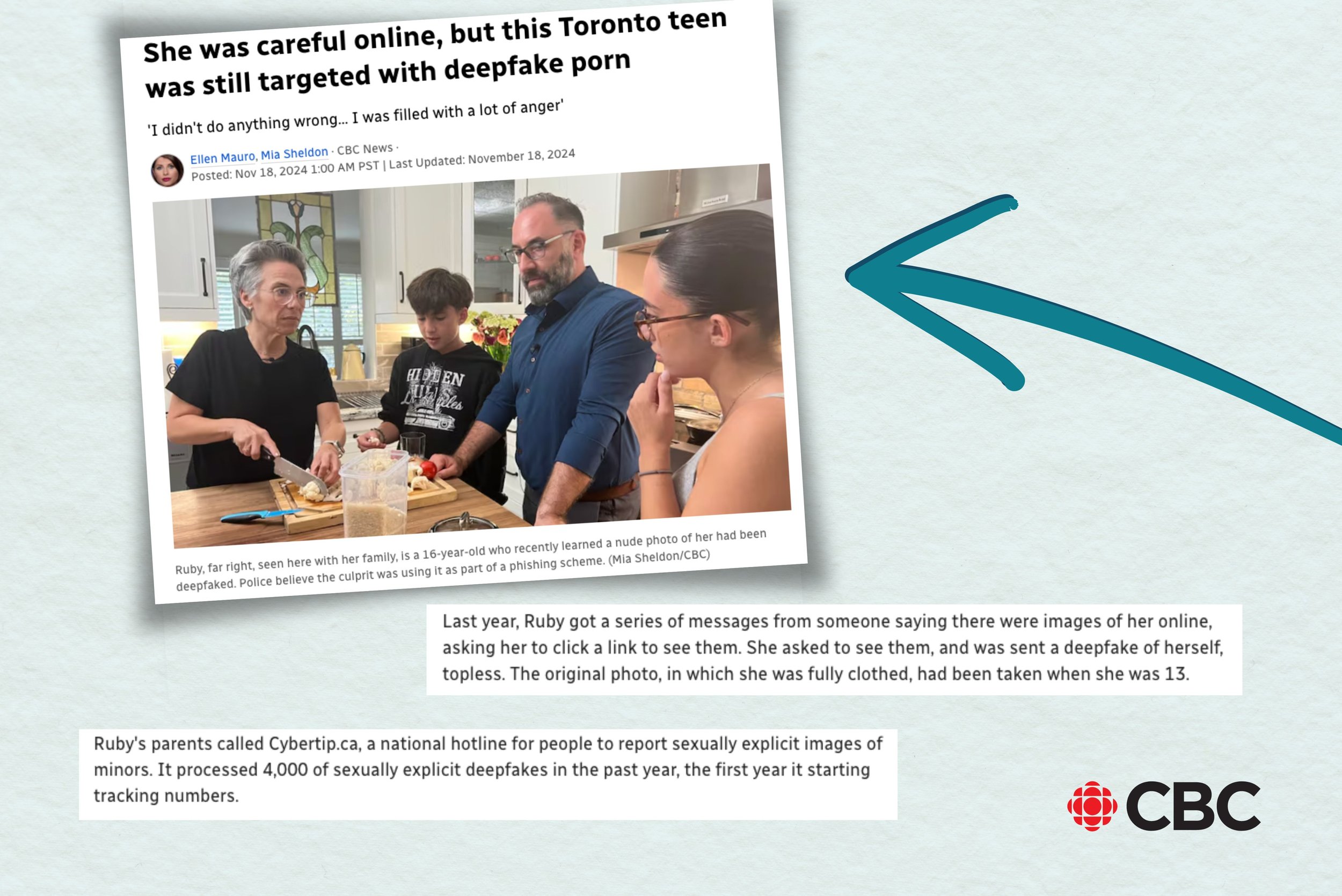

Deepfakes also pose serious dangers on a more personal level. In the past year or so, a disturbing new form of cybercrime has emerged involving explicit deepfake images of people, some of them minors, made without their consent. These images and videos are sometimes distributed among predators and other times used as blackmail material against the subject.

Whether they’re used on a macro or micro scale, deepfakes set themselves apart from other forms of AI. Unlike ChatGPT and big data AI models, which have some legitimate uses, there are very few good reasons to make a deepfake, aside from, say, de-aging Robert De Niro and Harrison Ford so that Hollywood never has to hire another actor again. (Which… is that actually a good reason?)

Aside from occasional, very specific use cases, the main purpose of deepfakes seems to be to misinform, deceive, and mislead, making them one of the most hazardous of the net’s many dangers.

Where Are Deepfakes Showing Up?

Deepfakes can appear anywhere you can post images, video, or audio—in other words, literally anywhere on the internet. And don’t go thinking that social media platforms have your back with this stuff—despite recent legislation passed to curb deepfakes, they still run amok on TikTok, Instagram, Snapchat, and Facebook, ranging from stupid, obviously satirical videos, all the way to earnest attempts to gain your trust through digital deceit.

Deepfakes are especially concerning for young kids and older folks, many of whom don’t have the internet safety skills needed to spot these artificial images. Depending on the context, this can lead to predators gaining control over kids, elders being scammed out of thousands, or even whole groups of people getting whipped up into a political frenzy. But with this being such new tech, there’s a good chance you don’t know all the signs that you’re looking at a deepfake.

How to Spot a Deepfake (Most of the Time, For Now)

Before we get into this section, one big disclaimer: This tech is advancing rapidly, and it’s possible that deepfake videos are already out there with none of these telltale red flags, being accepted as truth as we speak.

A year from now, many of these tips will probably be outdated as AI rapidly advances beyond our wildest dreams and its generated imagery becomes more vivid and lifelike. Always use your discretion when consuming content, and seek additional proof before believing anything you see online. With that said, there are a few signs that you are almost certainly looking at a deepfake.

For videos, look for:

Unnatural body movements or posture, with motions that don’t quite match up to what’s possible in the real world

Uncanny facial movements and microexpressions (pay special attention to the throat, eyes, and lips, as these tend to look ‘off’ before anything else)

Words out of sync with the movement of the mouth

Blurry or ‘smeared’ lips during movement

Unusually inconsistent pixelization or blurriness

Jagged edges on moving figures in the video

Continuity issues, such as clothing changing styles or colour over the course of the video

For images, check for:

A glossy or airbrushed ‘artificial’ look, especially on people’s faces and bodies

Extra fingers or limbs, unnatural joint bends, or skewed faces

Distorted, garbled text that isn’t in any language

Mismatched face and body skin pigmentation

For audio, listen for:

Robotic, artificial tone of voice lacking the flow of normal human speech

A total lack of background noise

Unnatural, repetitive background noise
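For the curious, the ‘total lack of background noise’ check above can be roughed out in code. This is a minimal Python sketch under loud assumptions: audio arrives as a plain list of samples between -1.0 and 1.0, and the frame size, the threshold, and the function names (noise_floor, suspiciously_silent, demo_clip) are all made up for illustration. It is a red-flag heuristic, not a deepfake detector. Real recordings can be perfectly silent for innocent reasons, such as noise-cancelling software, and a fake can have noise added on purpose.

```python
import math
import random

def frame_rms(samples, frame_size):
    """Return the RMS loudness of each full frame of samples."""
    levels = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        levels.append(math.sqrt(sum(s * s for s in frame) / frame_size))
    return levels

def noise_floor(samples, frame_size=400, quietest_fraction=0.1):
    """Estimate the background level as the mean RMS of the quietest frames."""
    levels = sorted(frame_rms(samples, frame_size))
    if not levels:
        raise ValueError("clip is shorter than one frame")
    keep = max(1, int(len(levels) * quietest_fraction))
    return sum(levels[:keep]) / keep

def suspiciously_silent(samples, threshold=1e-4):
    """Flag clips whose quietest moments are essentially digital silence.

    The threshold is an illustrative guess, not a calibrated value.
    """
    return noise_floor(samples) < threshold

def demo_clip(background_level, seed=0):
    """Build a fake 1-second clip at 8 kHz: a tone with pauses, plus room noise."""
    rng = random.Random(seed)
    clip = []
    for i in range(8000):
        speaking = (i // 2000) % 2 == 0  # alternate 0.25 s of 'speech' and pause
        tone = 0.5 * math.sin(2 * math.pi * 220 * i / 8000) if speaking else 0.0
        noise = rng.gauss(0.0, background_level) if background_level > 0 else 0.0
        clip.append(tone + noise)
    return clip

print(suspiciously_silent(demo_clip(background_level=0.0)))   # pauses are pure silence
print(suspiciously_silent(demo_clip(background_level=0.01)))  # pauses contain room hiss
```

The first clip is flagged because its pauses contain nothing at all, while the second passes because a faint hiss sits underneath the ‘speech’, as it would in most real rooms. Treat a flag as a reason to look closer, not as proof.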

You may notice that many of these red flags are less about hard evidence and more about gut feeling and instinct. We humans are naturally good at spotting the ‘uncanny’, but anyone who doesn’t have their guard up can fall victim to a well-made deepfake. And as we mentioned, it’s possible that you’ll encounter a deepfake that is completely realistic. In these cases, you need to employ a healthy dose of common sense to determine if what you’re looking at is real.

Before taking any action on something you see online, whether it’s voting, sending money, or simply replying to a message, ask yourself why this content is being put in front of you. What’s more likely: Brad Pitt wants to be your long-distance boyfriend, or a scammer is looking for a payday? Would Joe Biden really call and tell you not to vote for him, or could a bad actor have something to gain from your inaction? Now, more than ever, we need to scrutinize, doubt, and verify the ‘information’ we get from the internet, particularly in the relatively lawless wasteland of social media and its unknowable algorithms.

How to Be Online When You Can’t Trust What You See

As with many of the negative aspects of AI, it’s looking like we’re stuck with deepfakes and their ramifications for now. Unless some laws with serious teeth come around to mitigate this damaging digital danger (and that’s a HUGE if), it’s up to us to redefine our relationship with internet content.

Educate your kids about modern internet safety, including the latest AI technology and the ways it’s being misused to harm and exploit young people. Give your parents and grandparents the layman’s explanation of deepfakes, providing useful resources and reminding them to give you a call before they send a stack to ‘Brad Pitt’. But most importantly, remind yourself that most of the internet is no longer a reliable place to get actionable information on most things. The only real answer to stopping this potentially infinite feedback loop of misinformation and outrage is to make more time to unplug.

Rather than letting AI goad you into toting a pitchfork to the Parliament building, focus on your local community, getting involved in the politics and policies that will affect your day-to-day life. Spend time fostering romantic relationships in your real life (even if it means slogging through dating apps), leaving you less vulnerable to honeypot AI scams looking to exploit your emotions for money. Teach your kids to set boundaries with their time online, drawing a clear line between the things they know are real and the eternal question mark of social media content. Try to offset your time spent ingesting content with a little time creating something. By establishing this new context and mindset around our consumption of content, news, and intimacy, we can begin to take back some of the power held by deepfakes and those who wield them.